But Mary treasured up all these things

and pondered them in her heart.

—Luke 2:19

Pon·der: / pändər / verb / to think deeply (about); deliberate

Anyone who has known me even briefly notices that I am a voracious thinker. And if you’ve been following this project of mine, you know just how voracious. I think about everything. If it enters my neural networks, it will get sifted for unseen (and sometimes unusual) patterns and examined for the what, where, when, how, and why of the intellectual landscape. I am thinking, ever thinking. In a word, I ponder. I ponder a lot!

What’s more, like my pal, Mike Montaigne, I consider no topic unworthy of attention. That man pondered everything from farts and fear to death and the human condition, and then he wrote about it. He essayed. In fact, he invented the form, and every high schooler since who has had to master the 5-paragraph essay has, at one time or another, cursed his name, even if they didn’t know it. Even those of us who developed a passion for authorship and the written word have cursed him because we didn’t always have control over what we had been assigned to essay about!

Well, I’ve been at it again, pondering, and what I have been pondering are two things that at first glance may appear to have nothing to do with one another: Artificial Intelligence (AI) and the Thwaites Glacier. But if you bear with me, I will essay and reveal the hidden connection, including the unusual ethical conundrum it has evoked for me as an educator.

The topic of AI, of course, is nothing new to my pondering. Regular readers know that I have discussed everything from its potential impact on education to its danger to the very concept of truth. In fact, my very first post for the LoC project was about catechism and AI as a tool for learning. But what’s got me pondering AI yet again is a recent article in the September 2023 issue of Scientific American about how AI models are demonstrating knowledge about things no one has told them. They are effectively learning on their own, and the very people who invented them are—and I quote—“baffled as to why.” In fact, “a growing number of tests suggest these AI systems develop internal models of the real world, much as our own brain does” and “that GPT and other AI systems perform tasks they were not trained to do, giving them ‘emergent abilities’ ” (such as the ability to execute code they have written for themselves independent of the original human who created them!). Indeed, “researchers are finding that these systems seem to achieve genuine understanding of what they have learned.”

So as theologian Nadia Bolz-Weber put it (after discovering her own books were being used to train an AI), “have we created WALL-E or HAL? Likely both. Why? Because we are both.” The bottom line is that what it means to be human is complex, and therefore, anything we create that resembles being human will also be complex. That’s why MIT physicist Max Tegmark has authored an open letter (which I encourage everyone to read) calling for a six-month moratorium on the training of the most powerful AI systems so that we might decide how best to regulate this exploding field before we risk Skynet (of The Terminator movie franchise fame) becoming a reality and generating actual explosions. However, Tegmark is extremely pessimistic, sharing with The Guardian that he believes the economic competition is too intense for tech executives to pause AI development to consider its potential risks. As the title of the piece suggests, he thinks we are in a “race to the bottom” and that this djinn is not going back into its bottle.

And that’s what brings me to the Thwaites Glacier: because a similar “race to the bottom” is happening there, courtesy of climate change.

For those unfamiliar with the Thwaites Glacier, it is located in Antarctica (see map), and like many of the large ice bodies on this planet, it is actively melting faster than originally anticipated due to rising atmospheric temperatures.

However, what makes this particular large chunk of ice more significant—and has earned it the nickname, the “Doomsday Glacier”—is that its melting is increasing exponentially and threatens a potential full collapse in the very near future. If that happens, the most immediate consequence is a 65 cm rise in sea levels (a little over two feet) taking place within a matter of years, not decades—Goodbye Miami Beach and nearly every island nation in the world—and even more terrifying is that the Thwaites Glacier presently holds back the West Antarctic Ice Sheet from entering the sea. With Thwaites gone, an ice mass roughly the size of India will melt into the water over the next century, resulting in a sea level rise of 3.3 meters (nearly 11 feet)—Goodbye Florida!

By now, how AI and the Thwaites Glacier managed to merge in my pondering may be becoming clearer. Both situations currently represent ways in which humans seem to be actively working to crash and burn as a species, and our present mishandling of both poses a real existential threat to our well-being. But what finally linked them in my mind was reading the review of Elizabeth Rush’s The Quickening. My mother had originally brought this book to my attention (full disclosure: I have not seen a page yet), and she had shared how it is the story of the most recent research expedition to Thwaites (the source of the sea-level data I’ve been sharing; don’t read the full report if you want to sleep in the near future). Well, when I read the review, what interested me most was learning that much of the narrative of the book involves Rush’s exploration of her desire and uncertainty about potentially becoming a parent. Specifically (as is true of many couples these days), she spends time pondering whether it is ethical to bring a child into a world so threatened by the current “race to the bottom” documented by the expedition, a race which, I would argue, is happening in both our social and ecological worlds.

That brings me to what triggered my unexpected conundrum: given my own professional relationship with children, it occurred to me to wonder whether what I do for a living is ethical anymore. Is it ethical to work to prepare children for a future that simply may not be? I want to say the answer is “yes,” and having had at least four of my former students that I know of die already, I am aware that all my work and that of every other educator can be cut short by tragedy. But what if all that we are looking at is nothing but tragedy?

I’m too much the historian not to know that societies and civilizations come and go, with dark ages of varying size and scale in between, and I’m too much the biologist not to know that life has managed to persevere through every mass extinction that has ever happened on this planet (and that the one we are currently causing is nowhere near the scale of the Permian event that wiped out roughly 90% of all species alive at the time). Hence, I know that some manner of complex life, and maybe even society, will make it through the evolutionary bottleneck in which we find ourselves.

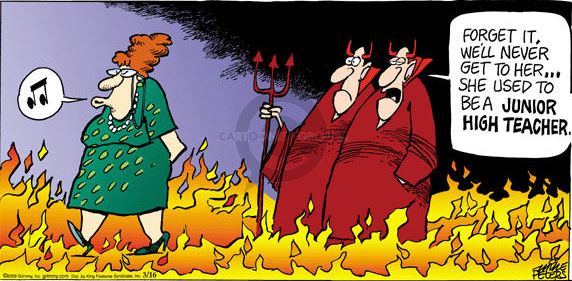

But that still leaves me wondering whether what I do for a living is ethical. Or more precisely, it leaves me wondering whether how I am preparing children to live in their futures is ethical. Should I be preparing them to correct their elders’ errors, or should I be preparing them to be a remnant people in an apocalyptic landscape? Should I be preparing them to fix a broken world, or should I be preparing them simply to survive in the brokenness? It’s like the cartoon I used in my recent post: should I be teaching them to program space robots or how to sharpen a stick with a stone? Which teaching will enable them not simply to survive but survive with some degree of positive meaning in their lives?

I wish I had a clear and obvious answer, but I don’t. I suspect it is probably a “both/and” rather than an “either/or” situation. But for now, all I can do is keep muddling through and, like Luke’s Mary (who was dealing with all manner of craziness in that barn that night!), keep pondering these things in my heart. Because it is only out of the heart, out of the love I have for my students, that I can find a resolution to my conundrum that is my best for them. As Luther said at the conclusion of his ecclesiastical trial, “Hier stehe ich; ich kann nicht anders.” Here I stand; I can do no other.

References

Bolz-Weber, N. (Oct. 22, 2023) My Robot, My Self: On AI, Religion, and “What It is to be Human.” The Corners. https://thecorners.substack.com/p/my-robot-my-self?utm_campaign=email-half-post&r=2y0by&utm_source=substack&utm_medium=email.

Kolbert, E. (2014) The Sixth Extinction: An Unnatural History. New York: Picador.

Milmo, D. (Sept. 21, 2023) AI-focused Tech Firms Locked in ‘Race to the Bottom’ Warns MIT Professor. The Guardian. https://www.theguardian.com/technology/2023/sep/21/ai-focused-tech-firms-locked-race-bottom-warns-mit-professor-max-tegmark.

Musser, G. (Sept. 2023) An AI Mystery. Scientific American. pp. 58-61.

Rush, E. (2023) The Quickening: Creation and Community at the Ends of the Earth. Minneapolis: Milkweed.